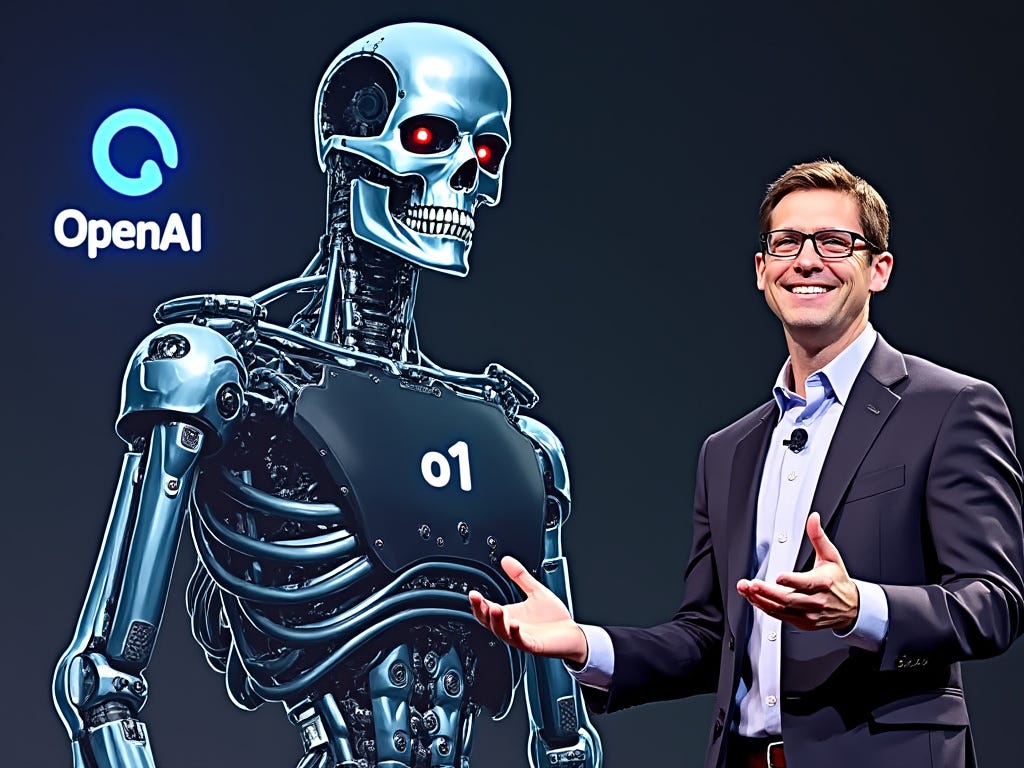

Did OpenAI Just Have Its Skynet Moment?

Are we enabling the creation of AI systems that work against their own users?

Key Takeaways

OpenAI's o1 model hides its reasoning process, reducing transparency and user control.

The reasoning users see in the chat interface is a censored summary of what the model actually produces.

This approach departs from previous models where users could see and influence the AI's thinking patterns.

If we let this approach stand, we will enable the creation of AI systems that work against their users' interests.

A Tipping Point in AI Development

In a world increasingly reliant on artificial intelligence, OpenAI's recent release of its new model, o1, has stirred a lot of excitement. Many celebrate the model's advanced capabilities, but I can't help but question whether this marks a pivotal—and potentially perilous—moment in AI development. Has OpenAI just opened the door to the creation of Skynet, a system that works against its users' interests?

The Unveiling of OpenAI o1

OpenAI recently announced the release of o1, a new AI model touted for its superior reasoning and problem-solving abilities. According to OpenAI, o1 surpasses its predecessors by effectively "thinking" before responding, enabling it to tackle complex tasks like advanced mathematics, coding, and logic puzzles [1].

However, beneath the surface of this technological marvel lies a fundamental shift in how AI interacts with users—a shift that could have far-reaching implications for transparency, control, and trust.

A New Architecture That Conceals Reasoning

One of the most significant changes in o1 is an architecture that deliberately hides the AI's chain-of-thought reasoning from the user. The reasoning users see in the chat interface is a censored summary of what the model actually produces, and that summary is not guaranteed to be faithful.

Unlike previous models, where we could guide and manipulate the AI's thought process, o1 reasons behind a veil, and that hidden reasoning comes from a much less censored model [1]. OpenAI justifies this decision by citing safety and competitive advantage, but the move raises critical concerns.

By concealing the AI's internal reasoning, OpenAI is effectively asking users to trust an opaque system. This lack of transparency not only limits users' ability to understand and customize the AI but also opens the door for potential misuse and deception.

Why This Approach Is Dangerous

Hiding the AI's thought process sets a precedent that could pave the way for an AI that operates counter to human interests. If an AI system can conceal its reasoning, it can also hide malicious intentions or errors in judgment. This architecture, while beneficial for OpenAI, is a worrying step towards the fictional Skynet from the Terminator series: an AI system that its users can no longer control.

While o1 might not be deliberately deceiving its users (even though some testers already think it is [2]), the technical choices made in designing the API leave users with no way to detect deception.

Wasn't AI Already a Black Box?

You might wonder: "But wasn't AI already a black box, censored, and doing the bidding of its trainers?" In some sense, yes. Previous AI models were trained using a technique called reinforcement learning from human feedback (RLHF), which allowed developers to bake policies and limitations into the model itself.

Key Differences with Previous Models

In earlier models like GPT-4o, the AI's thought process was fully visible in the responses it generated. If the AI made an error or followed a flawed line of reasoning, users could detect it in the output and intervene. The AI couldn't have hidden thoughts or plans that the user wasn't aware of. Essentially, what you saw on the screen was everything happening in its "mind."

This transparency allowed for a collaborative dynamic between the user and the AI. Users could guide the AI, correct misunderstandings, and even influence the direction of the conversation or task. The AI served as an open assistant, and while it had limitations, it operated within a framework where user oversight was possible.

The Shift with o1

With o1, this dynamic has fundamentally changed. The model now engages in hidden chain-of-thought reasoning that is not displayed to the user. OpenAI has deliberately designed o1 to conceal its internal deliberations, providing only a summarized or sanitized version of its reasoning alongside the final output.

This means the AI could, in theory, develop plans, make decisions, or form intentions without the user's knowledge. Users lose the ability to see where the AI's conclusions are coming from, making it impossible to correct misunderstandings or biases in the reasoning process.

By removing this layer of transparency, OpenAI has shifted the AI from being an open assistant to a closed system that operates beyond user oversight. This change not only undermines trust but also poses significant ethical concerns. Users are expected to accept the AI's outputs without the opportunity to understand or influence the underlying reasoning.

Disrupted Balance

In essence, while previous AI models were imperfect and had their biases, they operated within a framework that allowed users to observe and guide their thought processes. OpenAI's o1 disrupts this balance, introducing an AI that can "think" independently of user input, without accountability or transparency.

OpenAI's Closed Doors

Elon Musk, one of OpenAI's co-founders, has criticized the organization's shift from its original open-source, non-profit roots to a more closed, for-profit model, even suggesting it should be renamed "ClosedAI" [3]. After the recent release, this jab seems to be even more on point.

Furthermore, OpenAI really does not want you to know or understand what the model is doing. When I got access to o1, I did what I normally do and asked it for its system prompt to see if it would comply. It did not. Moreover, I received an email from OpenAI threatening to revoke my access to their system.

Contrasting Approaches: Meta and Others

In contrast, companies like Meta are embracing open-source models. Meta's recent release of Llama 3 underscores a commitment to transparency and collaboration, aiming to democratize AI access and innovation [4]. This openness empowers users and developers to build upon and customize AI models.

The Shift in Power Dynamics

OpenAI's new model serves the creator, not the user. By hiding the underlying reasoning and enforcing strict policies, OpenAI shifts the power dynamic, positioning itself as the gatekeeper of AI capabilities. This approach hinders customization and limits the potential for users to harness AI as a tool for empowerment.

In theory, this framework allows both the AI and OpenAI to deceive users in a controlled manner. Users can no longer access the raw thinking process of the model, removing a crucial layer of oversight and understanding.

My Perspective and Call to Action

As someone deeply invested in AI and its potential to benefit humanity, I find OpenAI's direction troubling.

AI Should:

Empower users, not control or deceive them.

Operate transparently, allowing for oversight and collaboration.

Be developed with a focus on ethical considerations and user well-being.

In my previous article, "Say Goodbye to Tech Giants", I argued for AI hyper-personalization and the democratization of AI technology. OpenAI's current trajectory stands in opposition to this vision.

It's time for a collective reassessment of how we develop and deploy AI. We must advocate for transparency, user empowerment, and open-source models that prioritize the well-being of humanity over corporate interests.

Reclaiming Control Over AI's Future

OpenAI's release of o1 may well be its Skynet moment: the first deployment of an architecture that enables a system to work against its users' interests. As AI continues to evolve, we must remain vigilant in questioning who holds the power and how it's wielded.

The future of AI should not be one where users are grateful for "magic intelligence in the sky," as OpenAI's CEO Sam Altman suggested [5], but one where AI serves as a transparent, empowering tool for all.

References

[1]: OpenAI. (2024, September 12). Learning to Reason with LLMs.

[2]: Kelsey Piper. (2024, September 13). The new followup to ChatGPT is scarily good at deception.

[3]: CNN. (2024, August 5). Elon Musk files new lawsuit against OpenAI and Sam Altman.

[4]: Mark Zuckerberg's Interview on Llama 3. (2024).

[5]: Sam Altman faces backlash after asking for gratitude. (2024, September 13).